June 18, 2025

The Quest to Streamline Local TV Advertising: Automation, Programmatic, and the Path Forward

By Brian Thoman, WideOrbit Chief Technology Officer

Local TV advertising continues to play a vital role in reaching high-value audiences, but buying and selling remains…

July 3, 2025

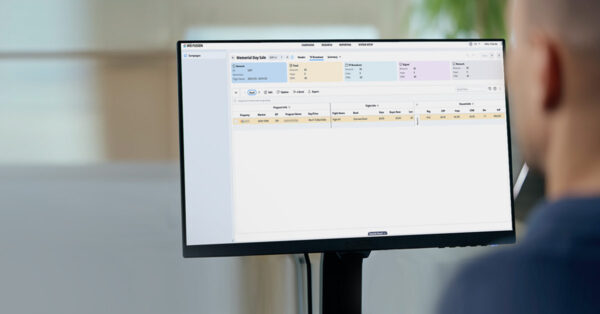

Centralized Cross-Platform Campaigns and Unified Sales Workflows with WO Fusion

In today’s fragmented media landscape, advertisers expect more than just reach – they want seamless cross-platform campaigns that deliver measurable results across linear and digital.…

June 11, 2025

Cross-Platform Ad Sales: What It Means and Why It Matters

In today’s rapidly evolving media landscape, advertising is more complex than ever. As media consumption spans linear, streaming, on-demand, and digital platforms, the tools, workflows,…

May 30, 2025

Unlocking the Power of Integrated Ad Tech in a Converged Media World

By Brian Thoman, WideOrbit Chief Technology Officer

In today’s rapidly evolving media landscape, the rise of streaming and digital platforms has transformed how advertising is…

May 13, 2025

Introducing Browser Access for WO Automation for Radio: Record Remote Voice Tracks Anytime, Anywhere

More than ever, radio stations today are challenged to provide the best experience for their audience while keeping costs tightly under control. With remote voice…

April 16, 2025

Is the ‘Consultative Sales’ Strategy Hurting the Sales Process?

Last month at Borrell Phoenix, Devlin Jefferson, WideOrbit SVP, Professional Services, teamed up with Brock Berry, CEO and Co-Founder of AdCellerant, to present a session…

March 27, 2025

Turning Digital-Only Clients into Legacy Buyers (and Vice Versa)

At Borrell Phoenix earlier this month, Devlin Jefferson, WideOrbit SVP, Professional Services, had the privilege of moderating a panel discussion on the importance of converting…